+ Final Project -

Scene Edge Classification

There is often interest in identifying geometry in a scene. Edge detection is an established way of looking at scene geometry, but naive edge detection in a complex scene reveals little about the physical geometry: lighting and shadowing effects, especially in outdoor scenes, can cause edge detectors to mistake texture and shadow edges for geometry edges. However, given several images of a scene in which the illumination changes over time, one can classify the different scene edges by separating out lighting effects and textures. We present a method that uses intrinsic images and the time-varying intensity gradient to identify geometry edges, texture edges and shadow edges. We first remove shadows, then look at the per-pixel variance of the gradient intensity to segment surface normals in the scene, and use the boundaries of those segments to find geometry edges. The texture edges are then the edges that have not been classified as geometry or shadow.

+ Geometry Edge Classification

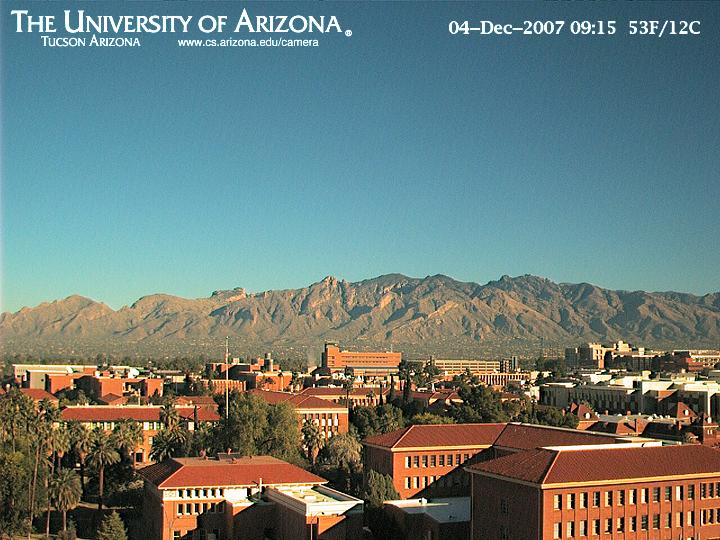

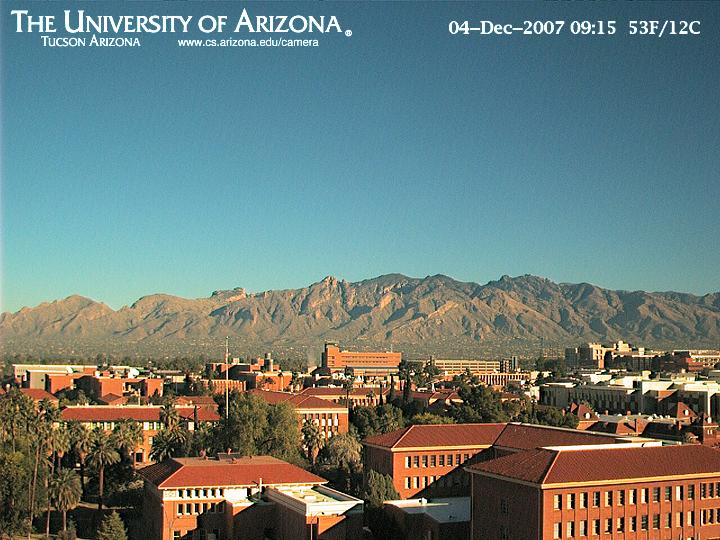

For our implementation, we used a sequence of images taken from a University of Arizona webcam and a sequence from an idealized scene constructed in the lab. For the Arizona dataset, images were taken at ~20 min intervals, for 50 images spread over an entire cloudless day. The idealized scene in the lab used 14 images. Both sequences vary in time, with the lighting moving in an arc over the scene. Our edge segmentation starts with Weiss’ method of taking X and Y derivative gradients of a sequence of images. We first convert each image to the LAB color space and use only the L channel throughout our implementation. We then stack all the images in the sequence into a matrix:

M = [ H x W x N ]

Here H x W is the image size and N is the number of images. This image matrix gives us a 1 x N time-varying intensity vector for each pixel location in the image:

M(i,j,:) = { p(i,j)_1, p(i,j)_2, …, p(i,j)_N }
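As a minimal sketch of this stacking step, assuming NumPy and small random arrays standing in for the L channel of each frame (the function name `stack_frames` is hypothetical, not from the original implementation):

```python
import numpy as np

def stack_frames(frames):
    """Stack N grayscale (H, W) frames into one (H, W, N) array."""
    return np.stack([np.asarray(f, dtype=np.float64) for f in frames], axis=-1)

# Synthetic stand-in for the L channel of N = 4 frames of a 3 x 5 scene.
rng = np.random.default_rng(0)
frames = [rng.random((3, 5)) for _ in range(4)]

M = stack_frames(frames)

# Time-varying intensity vector of the pixel at row 1, column 2.
pixel_series = M[1, 2, :]
```

Indexing the last axis of `M` at a fixed (i, j) yields the 1 x N vector described above.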

We then take the X and Y gradients (derivatives) of each image in the spatial domain and combine them using a sum of squares. This gives us two descriptions of each pixel over time, one in the intensity domain and one in the 2D gradient domain.
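The gradient step might look like the following sketch. It assumes NumPy's `np.gradient` as the derivative filter and combines the two components as gx² + gy² without a square root; the original derivative filters are not specified, so both choices are assumptions, and `gradient_energy` is a hypothetical name.

```python
import numpy as np

def gradient_energy(M):
    """Spatial gradients of every frame in an (H, W, N) stack.

    Returns the X gradient, the Y gradient, and their sum of squares,
    each with the same (H, W, N) shape as the input.
    """
    gy = np.gradient(M, axis=0)  # derivative along rows (Y direction)
    gx = np.gradient(M, axis=1)  # derivative along columns (X direction)
    return gx, gy, gx**2 + gy**2

# Synthetic stack: a horizontal ramp whose slope grows with the frame index.
H, W, N = 4, 6, 3
ramp = (np.arange(W, dtype=float)[None, :, None]
        * np.arange(1, N + 1, dtype=float)[None, None, :])
M = np.broadcast_to(ramp, (H, W, N)).copy()

gx, gy, energy = gradient_energy(M)

# Per-pixel gradient intensity vector over time, analogous to M(i,j,:).
gradient_series = energy[2, 3, :]
```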

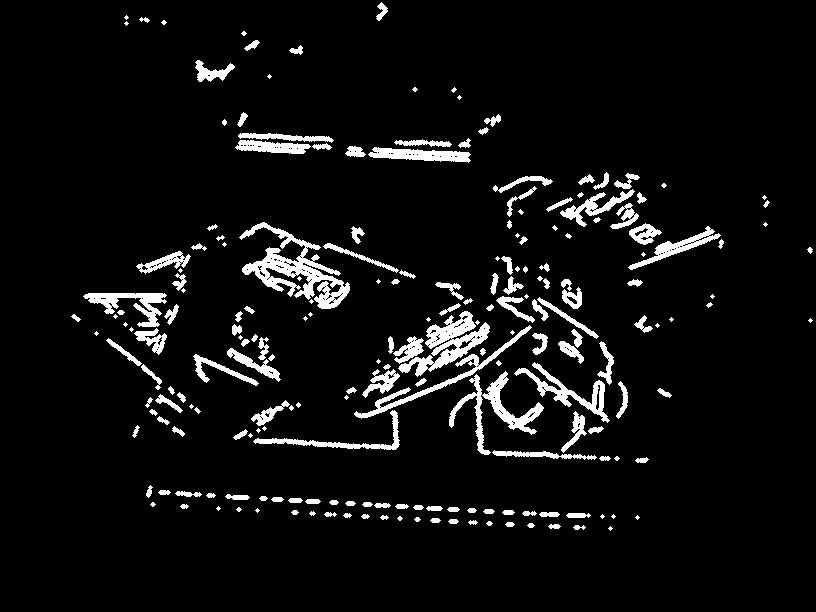

We can use the variance of these gradient intensity vectors to classify the edge type. By computing the variance of each pixel vector after throwing away the top and bottom 25% of the data, we obtain an outlier-rejecting variance. By thresholding this variance, we select only the pixels that correspond to edges, which are ideally geometry edges.
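A sketch of the outlier-rejecting variance, assuming NumPy. The 25% trim follows the text; the threshold value and the choice to keep pixels above it are assumptions made for illustration, since neither is stated here, and `trimmed_variance` is a hypothetical name.

```python
import numpy as np

def trimmed_variance(G, trim=0.25):
    """Variance over time of an (H, W, N) stack, computed per pixel after
    discarding the lowest and highest `trim` fraction of the N samples."""
    n = G.shape[-1]
    k = int(np.floor(trim * n))
    ordered = np.sort(G, axis=-1)   # sort each pixel's samples over time
    kept = ordered[..., k:n - k]    # keep only the middle of the distribution
    return kept.var(axis=-1)

# Two synthetic pixels with 8 samples each:
# the first is constant apart from outliers, the second varies steadily.
G = np.array([[[0, 0, 1, 1, 1, 1, 100, 100],
               [0, 1, 2, 3, 4, 5, 6, 7]]], dtype=float)

tv = trimmed_variance(G)

threshold = 0.5                     # assumed value, would need tuning per scene
candidate_mask = tv > threshold     # assumed direction of the threshold
```

The first pixel has a large ordinary variance because of its outliers, but its trimmed variance is zero, which is the behavior the trimming is meant to provide.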

+ Shadow Edges

Returning to our original X and Y gradients of the image sequence, we now median filter each gradient sequence in the time domain. Then, following Weiss’ method, we use the pseudo-inverse to reconstruct the intrinsic image from the filtered gradients. This gives us an image that is ideally devoid of illumination effects and composed only of the reflectance of the scene. From there it is straightforward to subtract an original L image from the intrinsic image to get an illumination-only image.
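The reconstruction can be illustrated on a tiny synthetic scene. This is only a sketch under stated assumptions: it uses NumPy, forward differences as the derivative filters, dense matrices with `np.linalg.pinv` (practical only for very small images), and an additive illumination model, whereas Weiss' formulation works on log intensities. All function names are hypothetical. The result is recovered only up to a constant offset, because gradients carry no information about the mean.

```python
import numpy as np

def forward_diff_ops(H, W):
    """Dense forward-difference matrices acting on a row-major
    flattened (H, W) image."""
    idx = np.arange(H * W).reshape(H, W)

    def op(src, dst):
        D = np.zeros((src.size, H * W))
        rows = np.arange(src.size)
        D[rows, src.ravel()] = -1.0
        D[rows, dst.ravel()] = 1.0
        return D

    Dx = op(idx[:, :-1], idx[:, 1:])   # I[i, j+1] - I[i, j]
    Dy = op(idx[:-1, :], idx[1:, :])   # I[i+1, j] - I[i, j]
    return Dx, Dy

def reconstruct_from_median_gradients(M):
    """Median-filter the X and Y gradients of an (H, W, N) stack over time,
    then recover a zero-mean image by least squares (pseudo-inverse)."""
    H, W, N = M.shape
    Dx, Dy = forward_diff_ops(H, W)
    flat = M.reshape(H * W, N)
    gx_med = np.median(Dx @ flat, axis=1)
    gy_med = np.median(Dy @ flat, axis=1)
    A = np.vstack([Dx, Dy])
    b = np.concatenate([gx_med, gy_med])
    return (np.linalg.pinv(A) @ b).reshape(H, W)

# Synthetic scene: fixed reflectance R plus a shadow step that sits at a
# different column in each of the 5 frames.
rng = np.random.default_rng(1)
R = rng.random((4, 6))
frames = []
for c in range(1, 6):
    shadow = np.zeros((4, 6))
    shadow[:, c:] = -1.0
    frames.append(R + shadow)
M = np.stack(frames, axis=-1)

reflectance = reconstruct_from_median_gradients(M)

# Illumination-only image for frame 0, following the subtraction in the text
# (defined up to the same constant offset as the reflectance).
illumination_0 = reflectance - M[..., 0]
```

Because each shadow edge appears at a given pixel in only one frame, the temporal median discards it, and the reconstruction matches the true reflectance minus its mean.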

By thresholding this image and then running an edge detector on it (a Canny edge detector), we are able to extract the illumination, or shadow, edges of the scene.

+ Texture Edges

Texture edges were found by running a Canny edge detector on an L image and subtracting the identified geometry and shadow edges. The remaining edges are attributed to texture.
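With the three edge maps represented as boolean masks, the subtraction reduces to a set difference. The sketch below assumes NumPy and uses small hand-made masks in place of real Canny output; `texture_edges` is a hypothetical name.

```python
import numpy as np

def texture_edges(all_edges, geometry_edges, shadow_edges):
    """Edges found by the detector that are neither geometry nor shadow."""
    all_edges = np.asarray(all_edges, dtype=bool)
    geometry_edges = np.asarray(geometry_edges, dtype=bool)
    shadow_edges = np.asarray(shadow_edges, dtype=bool)
    return all_edges & ~geometry_edges & ~shadow_edges

# Hand-made 2 x 3 masks standing in for real edge maps.
all_edges = np.array([[1, 1, 1],
                      [0, 1, 1]], dtype=bool)
geometry = np.array([[1, 0, 0],
                     [0, 0, 1]], dtype=bool)
shadow = np.array([[0, 1, 0],
                   [0, 0, 0]], dtype=bool)

texture = texture_edges(all_edges, geometry, shadow)
```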

+ Results

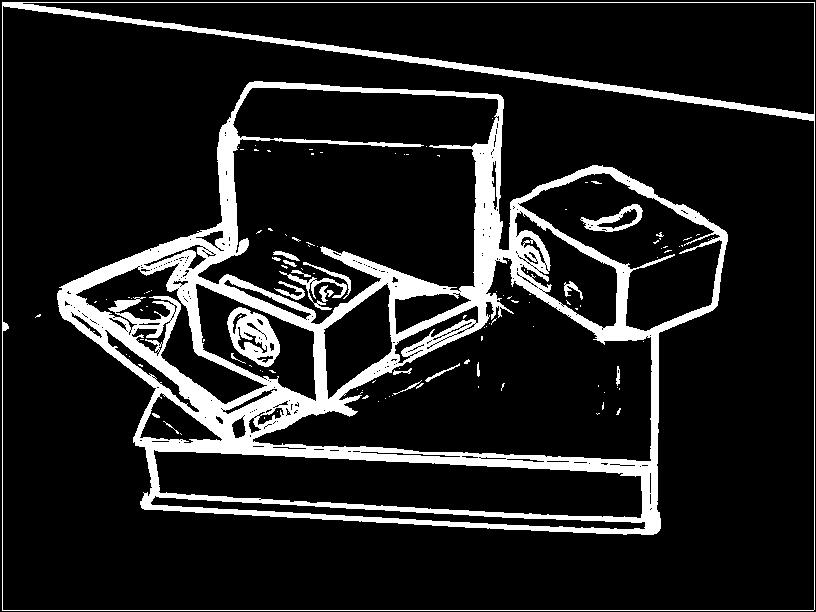

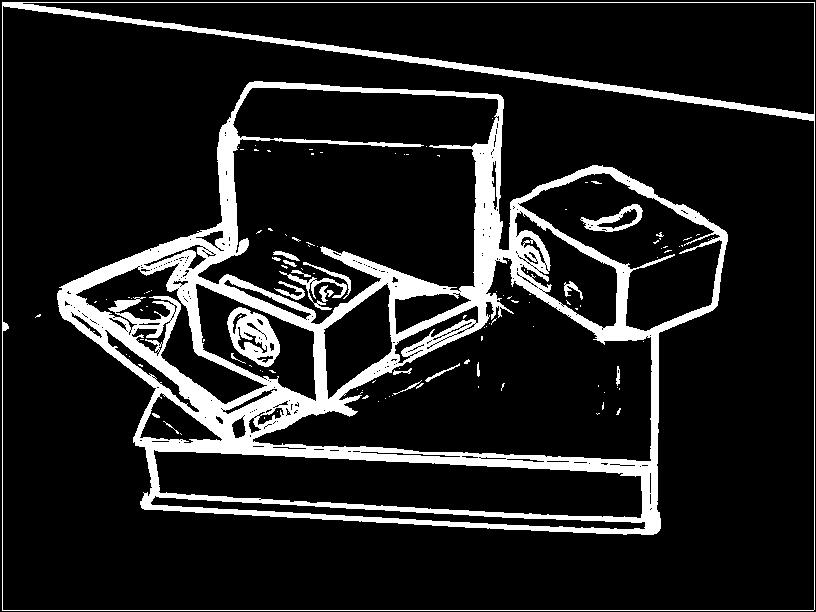

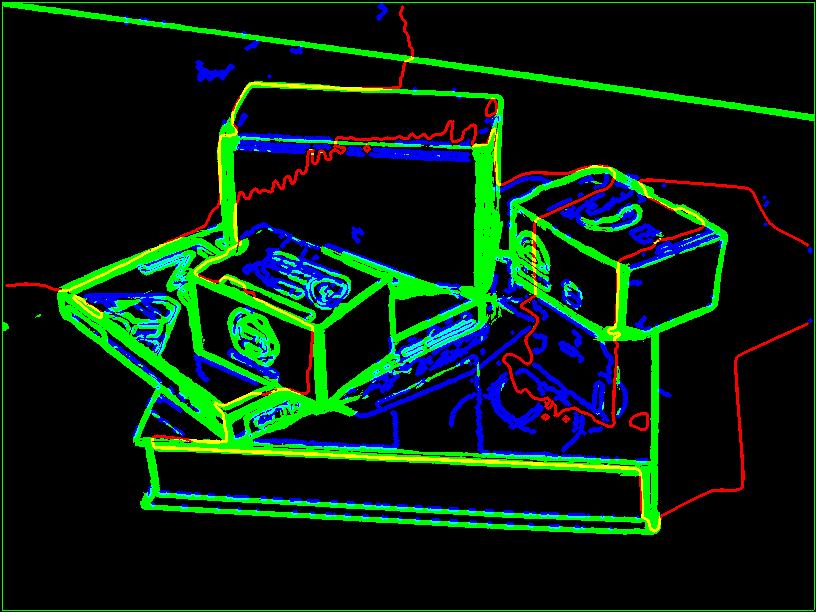

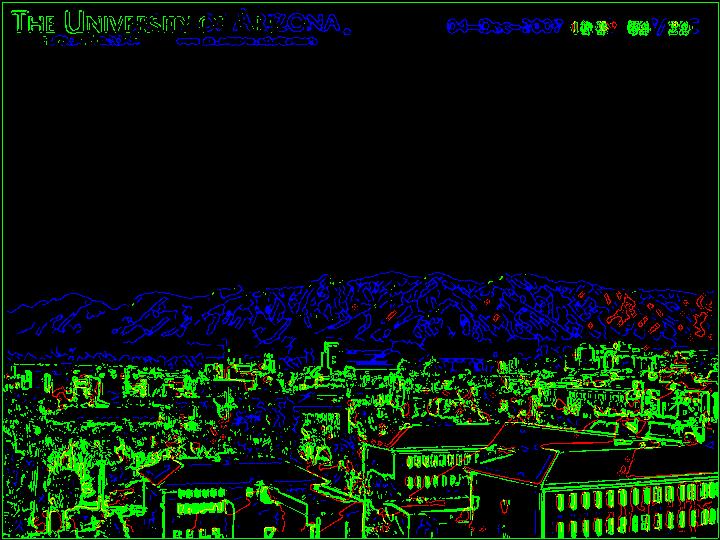

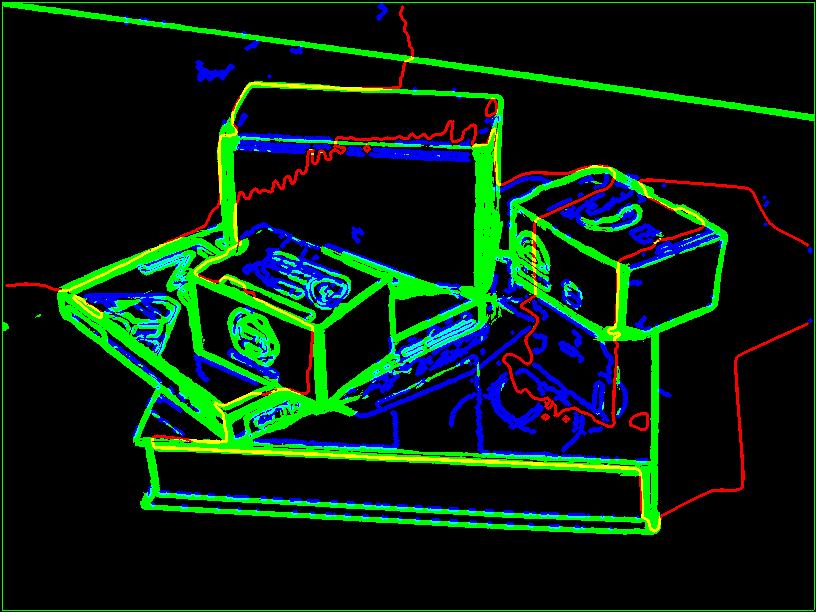

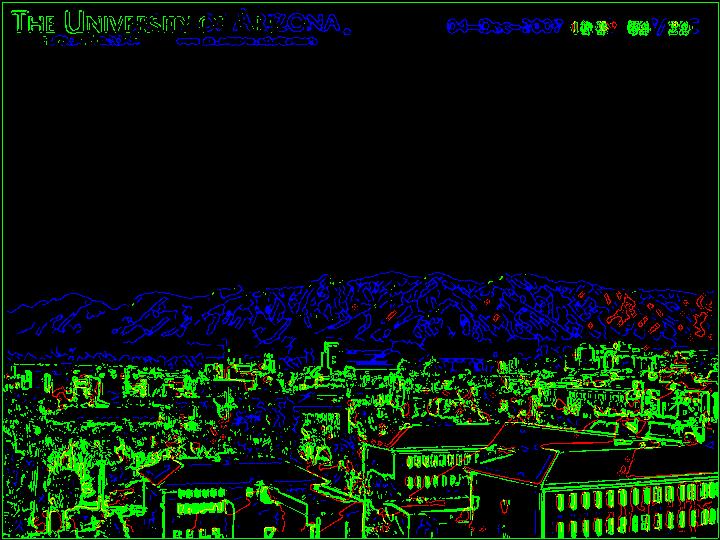

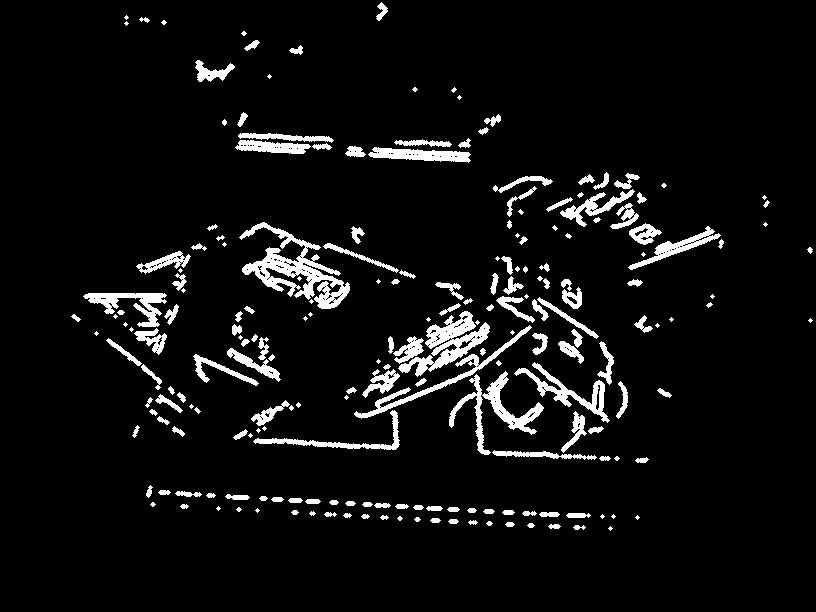

From these three classifications (geometry edges, texture edges, and shadow or illumination edges) we were able to create RGB composite images, where red shows shadow edges, green shows geometry edges and blue shows texture edges. The results for the ideal scene and for Arizona are shown. Classification of geometry edges and shadow edges is relatively robust, though some texture edges are misclassified as geometry. Also of note, the lettering in the Arizona image has been classified as geometry, but since there is no illumination change there, the lettering should be considered texture. This suggests there are slight problems in the intensity variance thresholding method.
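The composite described above can be assembled by placing each mask in its own color channel. This is a minimal sketch assuming NumPy and boolean edge masks of equal shape; `edge_composite` is a hypothetical name.

```python
import numpy as np

def edge_composite(shadow, geometry, texture):
    """Build an (H, W, 3) uint8 image: red = shadow edges,
    green = geometry edges, blue = texture edges."""
    rgb = np.stack([shadow, geometry, texture], axis=-1)
    return rgb.astype(np.uint8) * 255

# Hand-made 2 x 2 masks standing in for the three edge classifications.
shadow = np.array([[True, False], [False, False]])
geometry = np.array([[False, True], [False, False]])
texture = np.array([[False, False], [True, False]])

composite = edge_composite(shadow, geometry, texture)
```

A pixel claimed by more than one class would show up as a mixed color, which makes overlaps between the classifications easy to spot.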

+ Edge Only Reconstructions

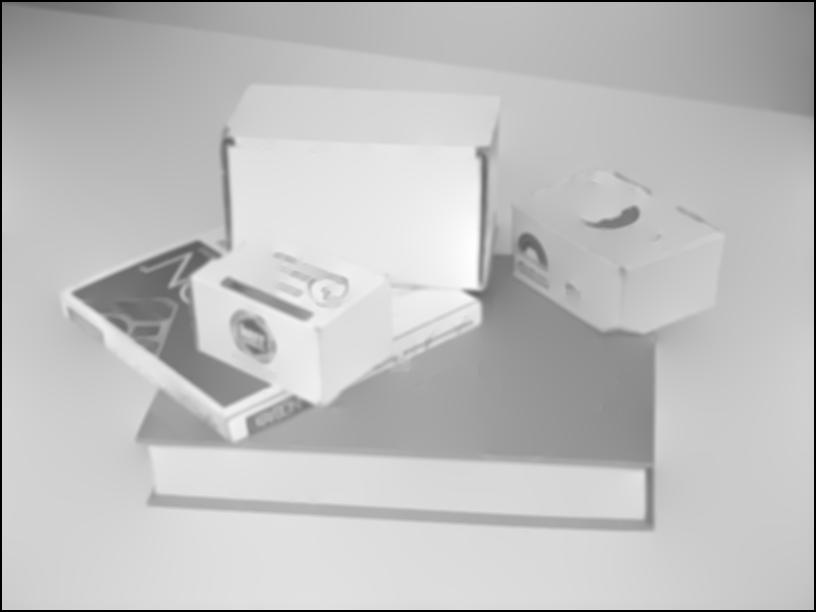

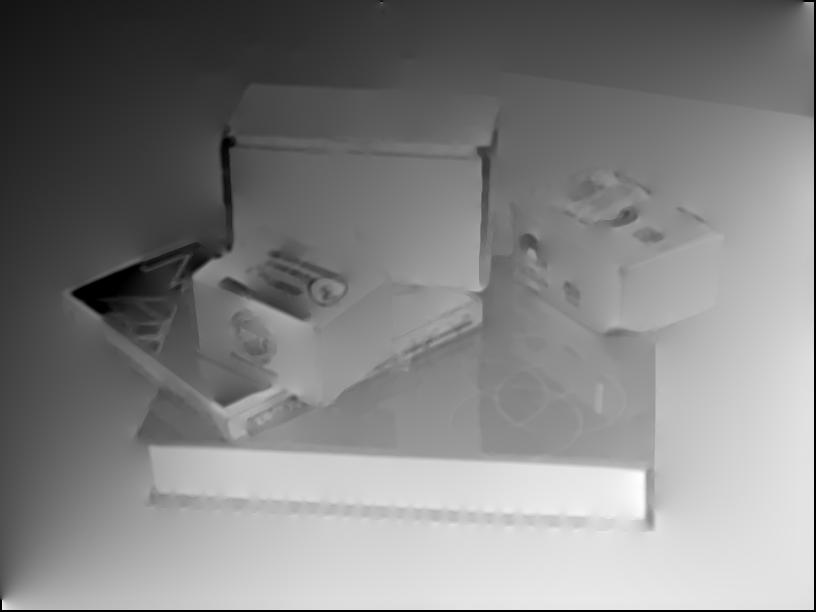

Another interesting result is the set of images reconstructed from edge-only gradient space. To create these, we take our median gradient images, zero out everything except the edges of the desired class, and then reconstruct using the pseudo-inverse from Weiss’ method. The results for geometry only, texture only and illumination only are shown in order. Ideally, the geometry-only reconstruction would show basic shapes without any texture, but because some texture edges are misclassified as geometry, we still see some texture in the reconstruction.

© 2008 Kelleher Guerin

+ All Rights Reserved
